As technology improves, artificial intelligence integrates itself into our everyday lives. AIs wake us in the morning, direct us on our road trips, and answer our questions online. However, a recent scandal in the Twitch community is sounding alarm bells across social media, the dark side of AI rearing its ugly head. The message is clear: women are being targeted by pornographic deepfakes, and no one is safe.

What Are Deepfakes?

Deepfakes are AI-generated videos and photos in which a person’s face or body has been digitally manipulated. These AIs use deep learning to alter images and audio, creating a realistic likeness so convincing that it can even fool the person being deepfaked. Although deepfake technology has been around since 1997, the term was first coined in 2017 by a Reddit user of the same name as a combination of “deep learning” and “fake.” As of 2020, over a million deepfakes had been created and distributed across the internet.

Synthetic media isn’t inherently evil. Several creators use deepfake videos for entertainment and parodies. Apps like FaceLike let you swap your picture with female celebrities and streamers in movies, television shows, and music videos.

However, deepfakes are also used for nefarious purposes. Since 2018, several deepfakes of prominent political figures like Barack Obama, Donald Trump, and Nancy Pelosi have gone viral. Many of these deepfake videos are made to influence voters, bolster propaganda, and cause political upheaval. In 2022, a deepfake video showed Ukrainian president Volodymyr Zelenskyy appearing to announce Ukraine’s surrender. Although debunked by Western sources, the video was extensively circulated on Russian social media.

The reach of synthetic media goes beyond politics. As you can see from the TikTok above, deepfakes are also centered around celebrities like Tom Cruise. Although that deepfake was not overtly negative, the same cannot be said for deepfakes targeting women. Female streamers and celebrities are routinely on the receiving end of harmful deepfakes, with these images and videos used to humiliate and harass the victims. In January, this was demonstrated through an audio clip of Emma Watson reading Hitler’s Mein Kampf, which quickly garnered media attention as a convincing deepfake created through ElevenLabs. However, that incident has quickly been overshadowed by the controversy surrounding Twitch streamers Atrioc and Qtcinderella.

Twitch Streamer Atrioc Faces Backlash

Brandon Ewing, better known by his Twitch handle “Atrioc,” found himself at the center of a deepfake controversy following a January 30th stream. During the stream, Atrioc accidentally panned away from his game to his open tabs before quickly going back to the game – but the damage was done. Eagle-eyed viewers froze the frame to reveal that Atrioc had previously visited a deepfake website. Even more damning, the screen grab showed deepfake porn of female streamers Maya Higa, Pokimane, and Qtcinderella.

After further investigation, Reddit users determined that the deepfake site was behind a paywall, meaning that Atrioc shelled out cash to see the graphic content. Qtcinderella was not originally identified as one of the women victimized, but it was later learned that Atrioc had to scroll past her deepfakes to see the nude images pictured. The screenshot of Atrioc’s deepfake porn quickly went viral, sparking debate across social media.

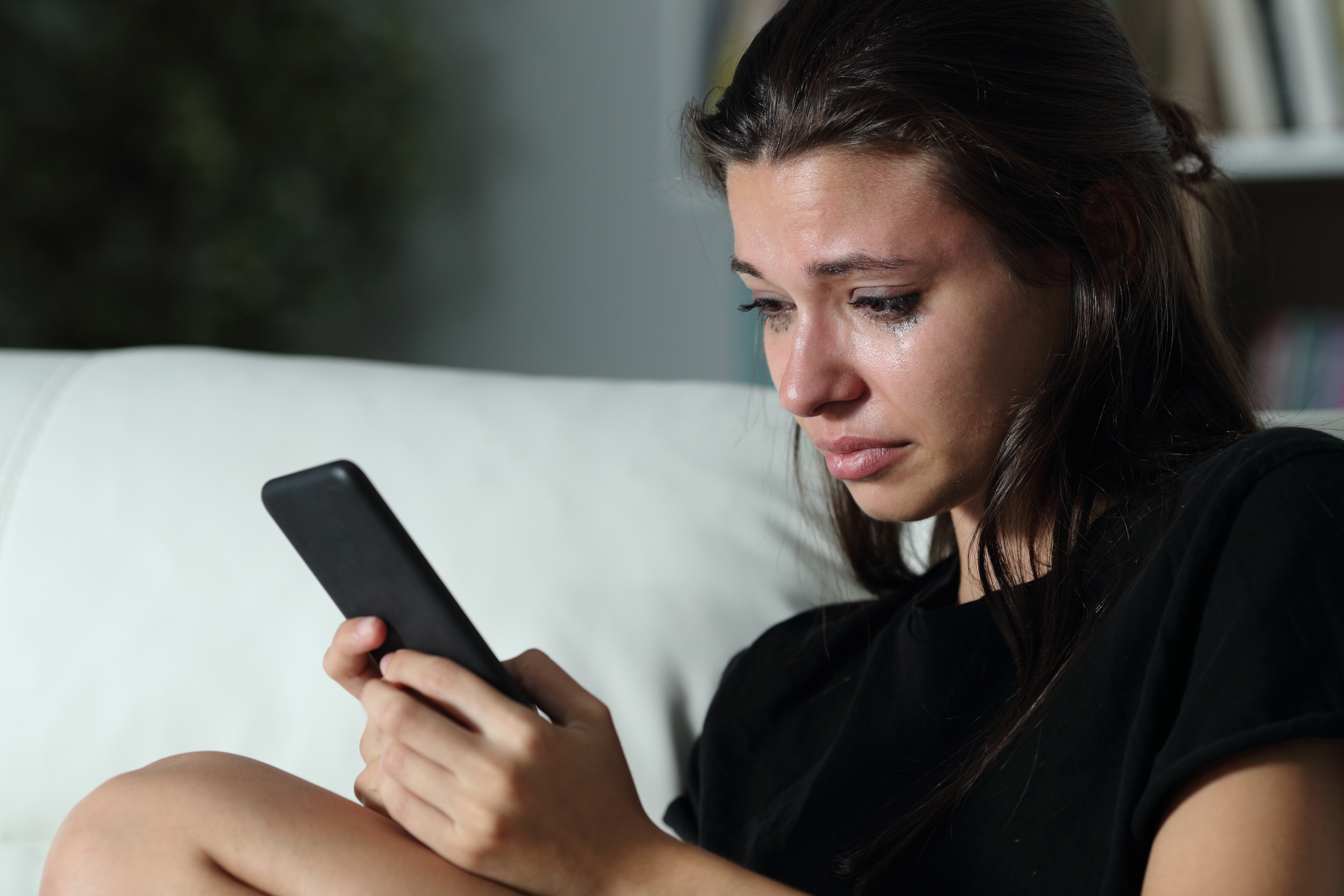

Qtcinderella was the first streamer to respond, both on Twitter and through a live Twitch stream. “This is what pain looks like,” she told her viewers, vowing to sue the deepfake site for hosting non-consensual porn. To make matters worse, Atrioc was not just a fellow streamer – he was also a best friend of both QT and her boyfriend, Ludwig.

Pokimane and Maya Higa later responded via Twitter, Higa stating that the situation made her feel “disgusting, vulnerable, nauseous, and violated.” Higa resented that her unwilling sexualization was overshadowing her numerous accomplishments and animal nonprofit work, an experience made all the more painful because she is a survivor of sexual assault.

Atrioc later issued a tearful apology over Twitch, claiming that he found the deepfake site through Pornhub advertisements and paid for a subscription out of “morbid curiosity.” He later vowed to help remove the content from the internet.

During a podcast segment, Qtcinderella told viewers that she was no longer on good terms with Atrioc and that her direct messages had been inundated with nude deepfakes of her body since the stream, creating intense body dysmorphia. “It looks convincingly like my body, but it also doesn’t because it’s [perfect], and I know my body’s not [perfect].”

The barrage of harassment and stress quickly took its toll on QT. “For the first time in years, I went into the bathroom and threw up my lunch,” she said.

Since the controversy began, many people have come forward in support of the victims, voicing their disgust at deepfake porn and sharing their own experiences. However, others have diminished the trauma that deepfakes cause, even going so far as to imply that streamers deserve the harassment.

“I was molested as a child, and it reminds me of that feeling,” Qtcinderella said during the podcast. Sadly, this is not a one-off experience – deepfake pornography is a growing problem for many women who, like Qtcinderella, have had to deal with the real-world consequences of having their likenesses used without consent.

Deepfake Pornography and its Victims

As deepfakes rise in prominence, so does deepfake pornography. According to deepfake detection company Sensity AI, between 90% and 95% of all deepfakes are pornographic, and 90% of those victimize women. To make matters worse, the number of deepfakes online is rapidly increasing, roughly doubling every six months.

Supporters of deepfake porn argue that consuming the content is just as ethical as watching any kind of porn. Several of Atrioc’s fans echoed the sentiment, admitting they also visited deepfake sites and would continue to do so.

Let’s be clear: watching porn is not the problem – watching non-consensual pornography is.

Most explicit deepfakes are created without the consent or knowledge of the person behind the images. It is no different than leaking someone’s nudes. In fact, fake content has been repeatedly passed off as real footage. X-rated videos of female celebrities Scarlett Johansson and Kristen Bell made the rounds on social media as “leaked nudes” in 2020, with one deepfake video receiving over 1.5 million views.

The problem is so significant that victims can now pay companies to search for and scrub non-consensual deepfakes of them from the internet, but this can cost hundreds of thousands of dollars, and it is impossible to remove them all. Not even wealth and fame can completely erase those videos from existence, and thousands of people still believe the content to be legitimate.

Despite what some think, putting your face on the internet does not mean you agree to be objectified. And it goes without saying that most of us have posted pictures of ourselves online at some point in our lives – and if we haven’t, our friends, parents, and peers often share our images on their own pages.

It only takes one post. As evidence, one journalist found that a startlingly lifelike deepfake can be created from a single 15-second Instagram story. Anyone can become an unwilling porn actor, and deepfake creators are happy to make the nightmare a reality, using photos of women and underage girls for their sexual gratification.

Nonconsensual deepfake porn is a violation in every sense. The societal and psychological effects on victims are numerous. Even if creators post disclaimers that the content is not real, a woman’s face and body are still being used against her will, an experience so traumatizing that some compare it to being sexually assaulted. Without any wrongdoing on their part, women have had their public image irreparably damaged, sometimes to the point where they are denied employment. Others have changed their names or left the internet entirely. All the while, deepfake technology becomes more advanced and more accessible to the general public.

Why People Create Deepfake Porn

Not all deepfakes are created equal, and the motivations behind them are just as varied. As we gain more information about the perpetrators and predators behind this content, three reasons emerge again and again: profit, revenge, and control.

Like many porn distributors, the average deepfake website features trailers and clips from longer videos that are behind a paywall. Users pay monthly or yearly subscriptions to receive access to non-consensual content of famous celebrities and figures. Some sites also allow pay-per-post and fulfill requests from subscribers. With rates rivaling common subscription services like Netflix, making deepfakes can quickly become lucrative, especially for creators who take commissions. They profit off the exploitation of women, who have no say or control over their own image.

Unsurprisingly, deepfakes are also weaponized as a form of revenge porn. A woman breaks up with a man, rejects his advances, or disagrees with his political views, and suddenly her face is superimposed onto a porn star’s body, a deepfake video taking a wrecking ball to her life. In these circumstances, the deepfakes serve two purposes – to get back at the woman for her perceived transgressions and to control her life. Her humiliation is paramount to the predator’s vindication, her silence the ultimate prize.

Why People Watch Deepfake Porn

In spite of the countless articles and reports on the sexual trauma deepfakes cause, millions of people consume AI-generated porn. Although you can argue that these viewers simply lack morals, there are societal factors that play a role as well.

Dehumanization of Public Figures and Women

One of the biggest problems behind deepfake viewership is that celebrities are seen as commodities, not people. Because they appear on our screens and in our ads, they are not treated with the same dignity or allowed the same privacy that society affords to the average person. Viewers either assume celebrities won’t know or care, or believe that they should simply get over it – it’s part of their job as public figures. It doesn’t matter if they’re uncomfortable, upset, or even underage. To the anonymous incels on the other side of the screen, they’re fair game – especially if they’re women.

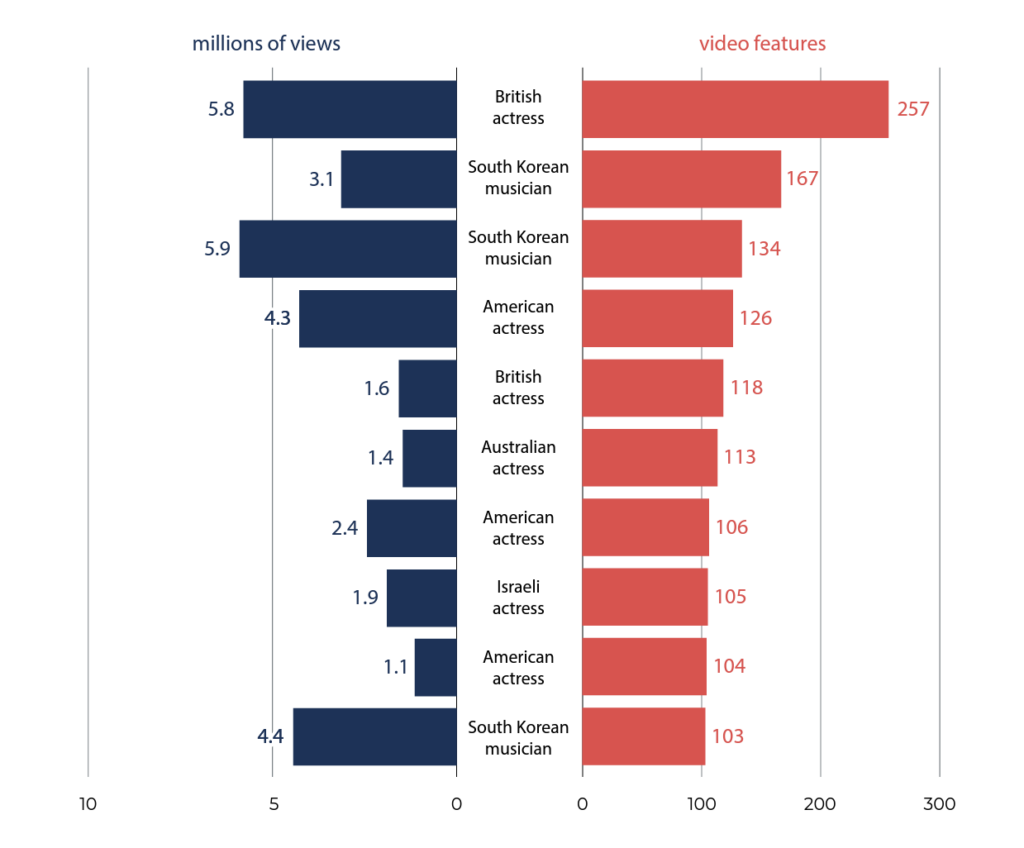

A 2019 report by Deeptrace, a cybersecurity company that studies deepfake tech, identified the ten people most targeted by deepfakes by their nationality and profession. All were women. At the top of the list, a British actress was the subject of at least 257 deepfake videos for a combined 5.8 million views. That is almost double the population of Mississippi (2.95 million).

The Appeal of Non-consent

Another reason behind the popularity of deepfakes is that some people are aroused by the idea of a nonconsenting subject or partner. Unlike consensual non-consent (CNC), viewing deepfake porn doesn’t involve mutual agreement and sexual pleasure for everyone involved. The appeal is that the woman being deepfaked doesn’t know about, or doesn’t want, being viewed in a pornographic setting. The watcher feels power over the woman, and in some cases may receive sexual gratification from the knowledge that the content disturbs, humiliates, and abuses her. Move over toilet cameras and upskirt pictures – deepfakes are taking over.

Laws Aren’t Keeping Up With Deepfake Technology

The U.S. government has been monitoring deepfake technology for years, but victims of deepfake porn have few legal protections. While most states have laws against the creation and distribution of non-consensual porn, the same cannot be said for laws against deepfakes.

Only four states, California, New York, Virginia, and Georgia, have laws that address pornographic deepfakes. Until the creation and distribution of non-consensual deepfake porn are made illegal under federal law, cases will be thrown out (or not taken at all) based on the technicalities and gray areas that currently exist. In other words, if someone puts your face on the latest Pornhub hit and sends it across the state of Mississippi – good luck filing charges.

How You Can Help Fight Pornographic Deepfakes

Hope isn’t lost. We can’t eliminate deepfakes altogether, but we can still regulate them and punish those who abuse AI technology. Here’s how we take our power back:

Learn How to Spot Deepfake Content

The first step in fighting disinformation is to identify it for what it is. When you’re viewing content you suspect to be a deepfake, pay careful attention to the face. Some deepfakes have discrepancies between the face and the rest of the source material (i.e. other parts of the body). For example, the skin tone may be a different shade or the fake features may seem unnatural or “off” on the original actor’s face shape. The eyes can also give away a deepfake because they look dead or expressionless. The person may also blink too often or not enough.

Another good rule of thumb is to look for blurriness around the face, hair, or skin that doesn’t align with the rest of the environment. There may also be lighting inconsistencies between the person and their environment, like unnatural shadows on and around their body.

As technology advances, visually identifying deepfakes may no longer be feasible. This is why we must convince our government to take action. In reality, we are already out of time when it comes to protecting deepfake victims, and the longer we do nothing, the harder it will be to finally catch up – and stay caught up.

Write Your Representatives

Lobby politicians to create laws that criminalize the creation, distribution, and possession of nonconsensual deepfake porn. The best way to do this is to write your representatives in Congress and urge them to take action. Also ask them to increase funding for research into artificial intelligence (AI) and deepfake detection. If enough people speak up, maybe our legislators will finally pass meaningful and enforceable laws that protect us from digital predators.

Support Victims of Deepfakes

It’s important to remember that the victims of deepfake porn need our help. There are organizations like The Cyber Civil Rights Initiative (CCRI) that provide support for individuals affected by non-consensual pornography, including deepfakes. Additionally, educate those around you on the consequences of deepfake technology. The more mindsets we can change, the fewer women will be victimized by the creeps of the world.

Your Most Authentic Sex Life – Deepfake Free

Atrioc is not the first or last person to watch deepfake porn, and soon this scandal will be overtaken by yet another viral deepfake, each more advanced than the last. In the meantime, we can each do our part to combat disinformation and harmful deepfakes by educating others, advocating for deepfake laws, and supporting victims. The Romantic Adventures team will keep fighting the good fight, providing the toys and resources for a happy and healthy sex life.